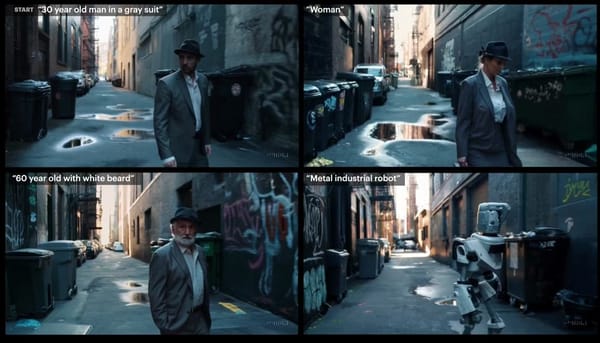

Generated by Sora, OpenAI's text-to-video model

Notice anything strange? While Sora is a promising path towards building general purpose simulators of the physical world, the current model has weaknesses. It may struggle with accurately simulating the physics of a complex scene, and may not understand specific instances of cause and effect. For example, a person might take a bite out of a cookie, but afterward, the cookie may not have a bite mark.

The model may also confuse spatial details of a prompt, for example, mixing up left and right, and may struggle with precise descriptions of events that take place over time, like following a specific camera trajectory.

All of these videos were generated by Sora without modification.

Prompt 1: a glass cup falls to the floor, shattering.

Prompt 2: Basketball through hoop then explodes.

Prompt 3: Archeologists discover a generic plastic chair in the desert, excavating and dusting it with great care.

Prompt 4: Step-printing scene of a person running, cinematic film shot in 35mm.

Prompt 5: A grandmother with neatly combed grey hair stands behind a colorful birthday cake with numerous candles at a wood dining room table, expression is one of pure joy and happiness, with a happy glow in her eye. She leans forward and blows out the candles with a gentle puff, the cake has pink frosting and sprinkles and the candles cease to flicker, the grandmother wears a light blue blouse adorned with floral patterns, several happy friends and family sitting at the table can be seen celebrating, out of focus. The scene is beautifully captured, cinematic, showing a 3/4 view of the grandmother and the dining room. Warm color tones and soft lighting enhance the mood.

*Sora is not yet available to the public. We’re sharing our research progress early to learn from feedback and give the public a sense of what AI capabilities are on the horizon.

OpenAI
